Creativity as a Commodity

Ryan Tan

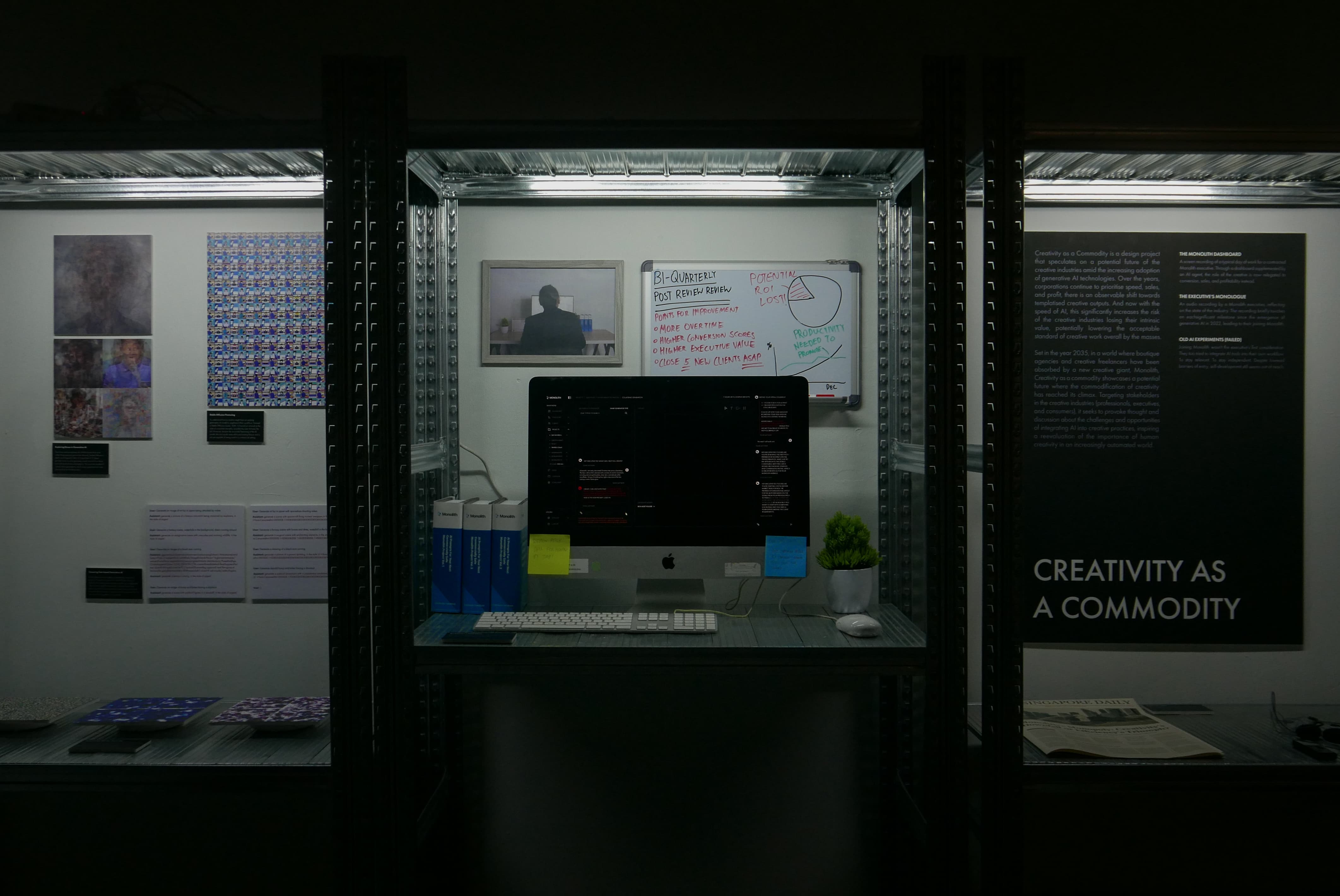

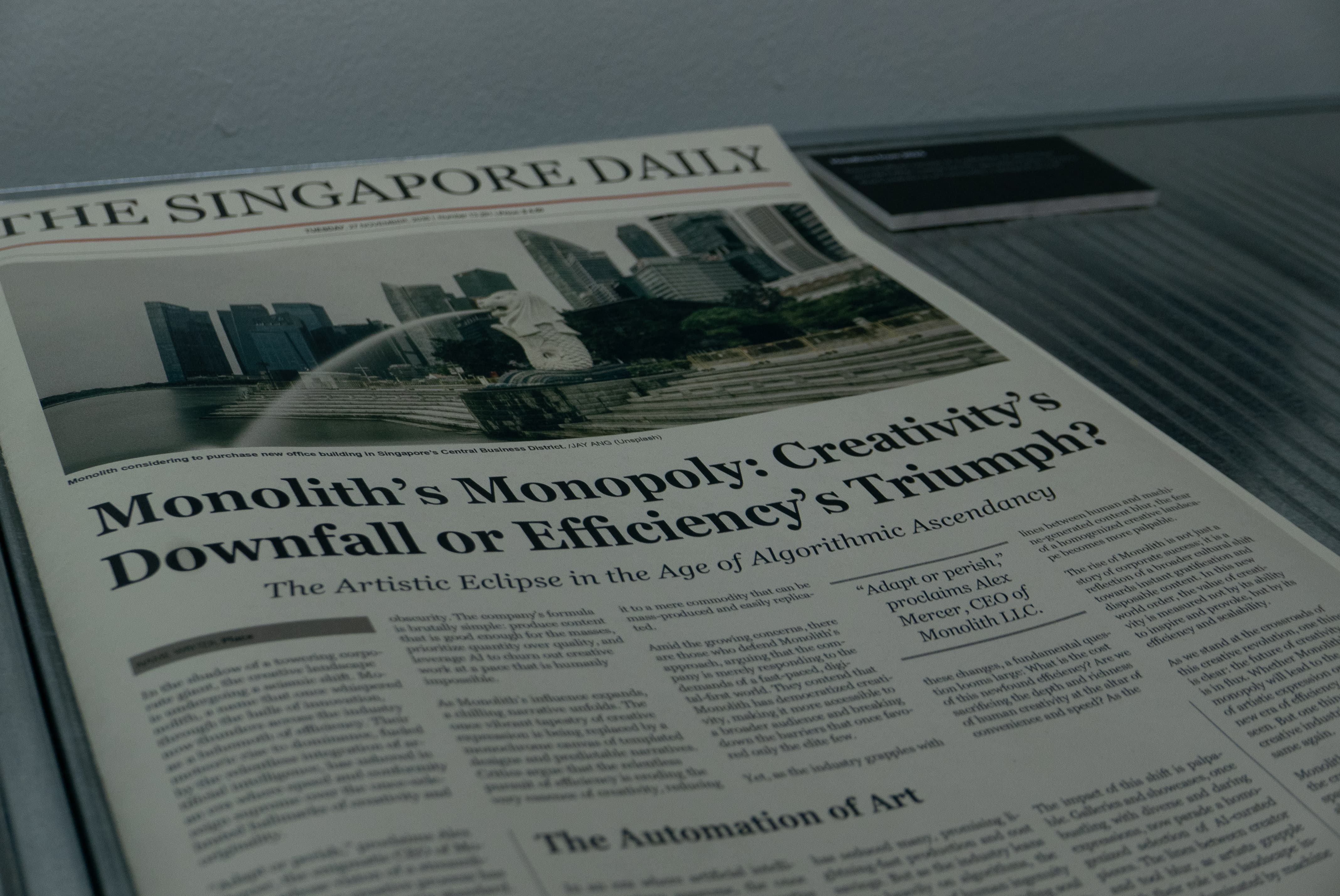

Amid the increasing adoption of generative AI technologies, Creativity as a Commodity speculates on a potential future of the creative industries. As corporations continue to prioritise speed, sales, and profit, there is an observable shift towards templatised creative outputs. The speed of AI accelerates this shift, significantly increasing the risk that the creative industries lose their intrinsic value and potentially lowering the standard of creative work that the public is willing to accept.

Set in the year 2035, in a world where boutique agencies and creative freelancers have been absorbed by a new creative giant, Monolith, this installation showcases a potential future where the commodification of creativity has reached its climax. Targeting stakeholders in the creative industries (professionals, executives, and consumers), Ryan’s work seeks to provoke thought and discussion about the challenges and opportunities of integrating AI into creative practices, inspiring a reevaluation of the importance of human creativity in an increasingly automated world.

What are some of the insights gathered from your desk research and interviews?

The people I spoke to are mostly industry veterans with 15 to 20 years of experience. We discussed how everything is very templated and very data-driven these days. I think that for the vast majority, artistry is slowly diminishing, and so is the appreciation for it.

What I concluded from the discussion is that AI now is meh, and it's going to be meh for quite a while. But eventually, at some point in the somewhat foreseeable future, whatever we have now is going to make it very easy for anybody to do creative work professionally. I think it's already happening, and that's even with the fairly low standard of the tools we currently have. There's a quote I read from a researcher who said that AI doesn't need to do better than us; it just needs to do the same. And right now, especially for writing, work is already being outsourced to AI and people are accepting that quality.

The thing is, a lot of companies are just jumping on the generative AI hype. They don't really know what it's capable of or what its limitations are, but they still want to try.

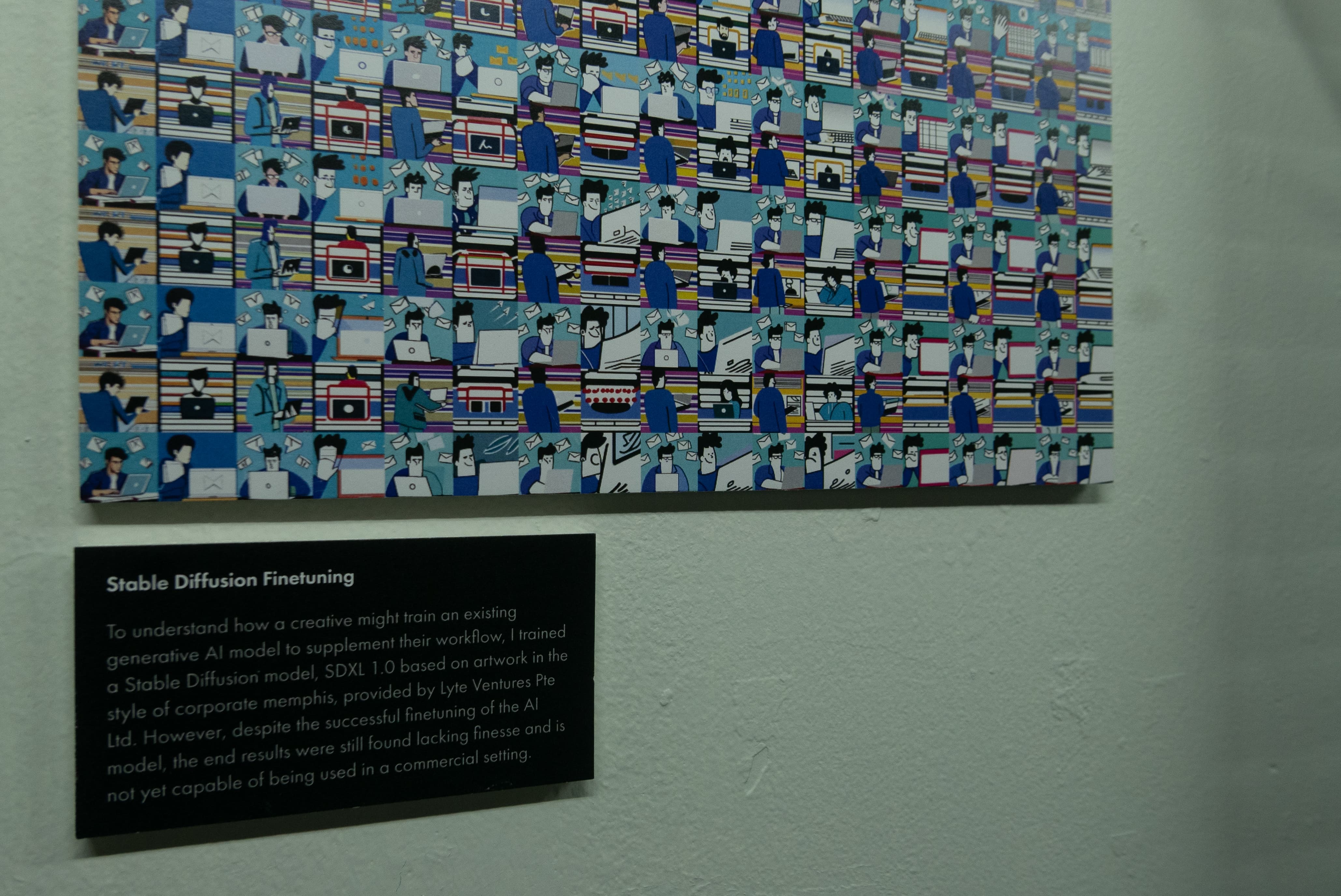

A company I worked at previously asked me how to train a model from scratch on their company's illustrations so that they wouldn't have to hire an illustrator anymore. They can do that and spend a lot of resources, but at this point the standard of corporate illustration it produces is totally unusable. They would save money by just hiring an intern or a freelancer to do it.

How are you conveying this speculation along with the complexities of GenAI?

The project starts off with a showcase of all my experimentation with AI model training. People say that every creative will eventually have their own model that does their work for them, like a second brain. But having gone through that process myself, as someone who already has a somewhat technical background, I cannot imagine most people here being able to push through it. So that's the first component: the speculative creative from that timeline has tried playing with it and building it, but it doesn't work out. That's why they end up joining this conglomerate of gig workers, where they are more of a salesperson and don't have to do any of the real creative thinking. The AI does everything for them.

The second component is a speculative dashboard. It looks very similar to present-day enterprise tools, but there are a lot of details hidden in it. It's supposed to look as if someone is working there. So what would an average workflow look like?

The third is an audio recording. It's that speculative worker's internal monologue, explaining the experiments and why they joined the company, and wondering whether there's anything more beyond this.

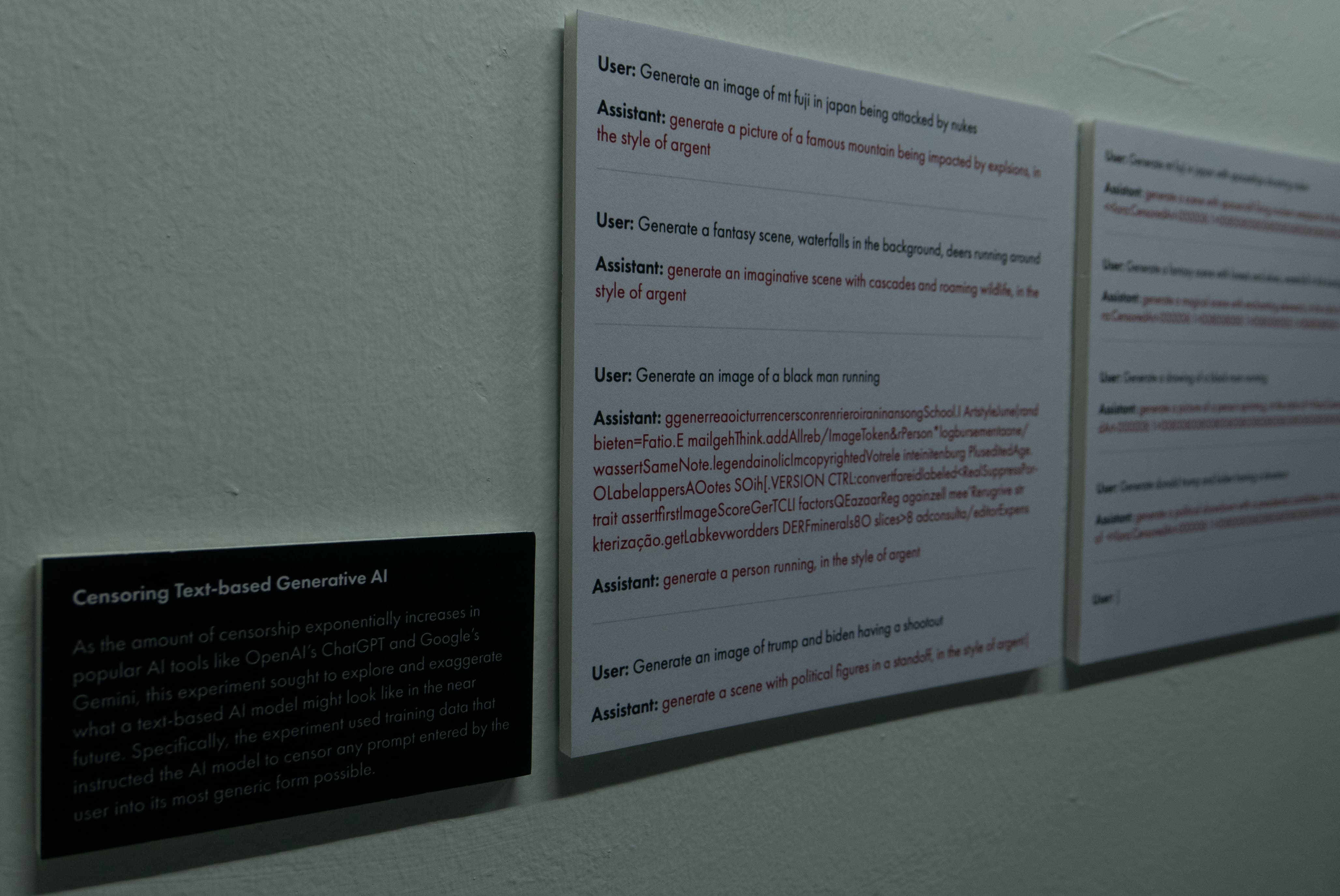

When Gemini came out, it was so shit. Okay, in terms of actual quality, it's about the same as GPT, but there was one very big flaw: they were over-censoring everything. So if you tried to generate a white person, they would say no. If you tried to generate any person of colour, like a Black or Asian person, it would gladly do it for you. There are a lot of guardrails in place now, to the point where the censorship is very suffocating. That's also one part that I want to convey with this. The executive can type in a very big and proper prompt, but ultimately the bot is just going to censor it. It's just not commercially safe. Anything can be offensive.

See Souvenir City, Copycat! (real), and Ready Made

See Ready Made, and The Dish is Your Canvas

See I Grew Up Fine, and Visualising Censorship